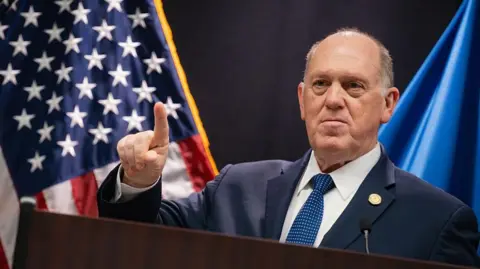

LOS ANGELES (AP) — Government officials' continued use of AI-generated imagery has raised significant concerns about misinformation and the trustworthiness of official communications. The administration has repeatedly shared such images. A recent example was a post featuring an altered image that showed civil rights attorney Nekima Levy Armstrong in tears after her arrest.

The deliberate alteration of the original arrest photo drew a backlash from misinformation experts, who warn that the practice can selectively distort public perception of reality and breed distrust. The episode highlights growing concern about the blurring line between authentic news and AI-manipulated media.

In defense of the altered imagery, White House representatives called the images memes and brushed off the criticism, casting the posts as humor. Critics such as David Rand, a professor of information science, observed that such fabrications can mislead the public while shielding the administration from accountability.

Experts also caution that repeated use of altered images, especially by sources the public regards as credible, could cement misleading narratives about important events and erode public trust. Michael A. Spikes of Northwestern University emphasized that people rely on government channels for reliable information, and that manipulated visuals complicate that relationship.

Ramesh Srinivasan of UCLA pointed to the broader implications: as AI technologies proliferate, telling truth from fiction becomes increasingly difficult for ordinary citizens.

The dangers of this trend extend beyond political discourse. Social media platforms are increasingly flooded with AI-generated videos depicting contentious topics such as immigration enforcement. Many content creators use such videos to drive engagement, further blurring the line between factual reporting and sensationalism.

Jeremy Carrasco, a media literacy expert, voiced concern that many viewers may be unable to distinguish reality from AI-enhanced or fabricated visuals when it matters most, such as in politically heated moments. As AI technology continues to evolve, experts including Carrasco advocate watermarking systems that would help establish the credibility of the media being circulated.

The ongoing debate over AI's impact on public perception and trust underscores the pressing need for transparency and accuracy in government communications.